Infrastructure as Code in Google Cloud

A guide to getting started with Infrastructure as Code tools in Google Cloud.

Raywon Kari - Published on July 15, 2020 - 9 min read

Introduction

Infrastructure as Code (IaC) is a practice that uses automation to manage resources declaratively: provisioning, configuring, updating, decommissioning and so on. More theory on this topic can be found on Wikipedia. IaC practices have gained a lot of popularity because they bring consistency, predictability and reusability to a system.

In the current cloud era, most cloud providers let us manage cloud infrastructure with IaC practices. In Google Cloud Platform, IaC is powered by a service called Deployment Manager. With it, we can declaratively define cloud resources in YAML 📃 templates, or generate those templates using Python or Jinja, and then deploy them. In this example, we will use a mix of a YAML configuration and a Python template. The rest is taken care of by the service itself.

In this blog post, we will explore the basics of Deployment Manager and how to use it, through an example.

Basics of Google Cloud Platform

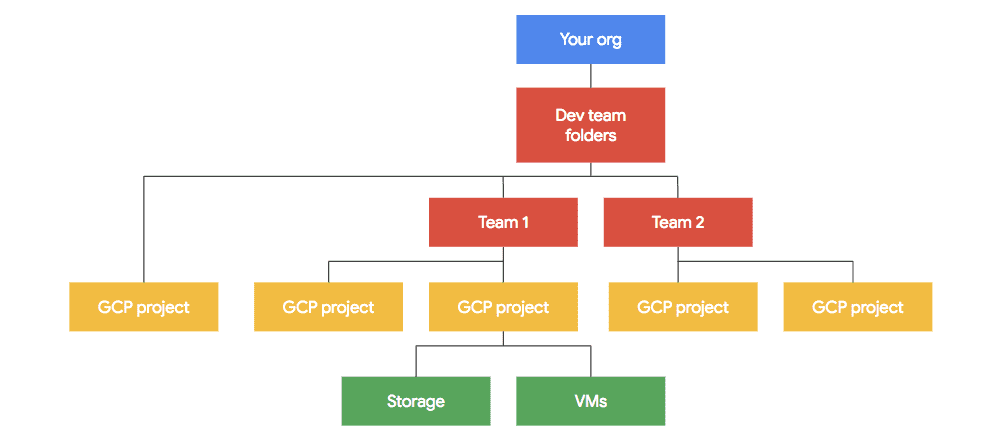

Google Cloud Platform is organised as illustrated in the following diagram:

It is important to understand the hierarchy of the platform. All cloud resources belong to a particular project; projects belong to teams or are grouped into folders, which are owned by an organisation. Deployment Manager primarily works with projects and the resources in a project.

Basics of Google Deployment Manager

When we go out to a restaurant, what do we usually do 🍛 ?

We say what we want to eat, we get the food 😄, and at the end we pay the bill.

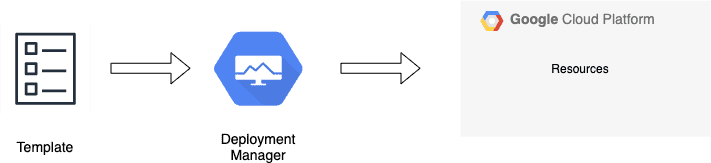

The same thing happens with Deployment Manager. We describe what we want in the templates, we upload the templates, and it creates and configures the resources for us. So writing the template is ordering the food, creating and configuring the resources is getting the food, and paying the bill works exactly the same way 😉.

Using Deployment Manager, we can specify all the resources we need in a declarative format using YAML. We can also use Python or Jinja templates to parameterize the configuration, which makes it reusable. Here we are embracing configuration-as-code practices. All of this enables us to perform repeated deployments with consistency and predictability.
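To make the declarative idea more concrete, here is a toy model in Python of "declare the desired state and let the tool work out the steps". It is only an illustration under simplifying assumptions (resources are plain dictionaries keyed by name) and is not how Deployment Manager is implemented internally; the plan function and the resource names are hypothetical.

```python
"""Toy model of declarative reconciliation (illustration only; not how
Deployment Manager is implemented internally)."""

from typing import Dict, List, Tuple

# Hypothetical representation: resource name -> dict of properties.
Resources = Dict[str, dict]


def plan(desired: Resources, actual: Resources) -> List[Tuple[str, str]]:
    """Return the (action, name) steps that move `actual` towards `desired`."""
    steps = []
    for name, props in desired.items():
        if name not in actual:
            steps.append(("create", name))
        elif actual[name] != props:
            steps.append(("update", name))
    for name in actual:
        if name not in desired:
            steps.append(("delete", name))
    return steps


if __name__ == "__main__":
    desired = {"function": {"runtime": "nodejs8", "availableMemoryMb": 256}}
    actual = {"old-bucket": {"location": "europe-north1"}}
    print(plan(desired, actual))  # [('create', 'function'), ('delete', 'old-bucket')]
```

Because the plan is computed from the declared state, running it again once the actual state matches the desired state yields no steps at all, which is where the repeatability comes from.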

In short, we can use Deployment Manager in two ways:

- By writing the actual infrastructure as code in YAML files.

- By writing a template that generates the infrastructure as code in YAML syntax, referencing that template from a config file, and running Deployment Manager with this config file. We follow this approach in this blog post.

Here is a simple workflow for using Deployment Manager:

Playing with Templates

A template or configuration file is the heart of the Deployment Manager service, so it is important to understand how to construct or generate one.

A template must contain a resources section, where we declare the resources we need; other sections such as outputs and metadata are optional. A config file also needs an imports section to import any templates used to construct the deployment.

Here is an example of a configuration file:

```yaml
imports:
- path: /path/to/template/01
  name: template-one
- path: /path/to/template/02
  name: template-two

resources:
- name: resource_name_01
  type: type_of_resource_01
  ...
- name: resource_name_02
  type: type_of_resource_02
  ...
```

Here, the imports section contains the list of templates used by this configuration file. Deployment Manager recursively expands these imported templates to form the final configuration under the hood. The resources section can contain any Google Cloud resources to be created by this file; each resource has a name, a type and properties. The file can optionally contain outputs and metadata definitions as well.
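The structural rules above (imports carry a path, every resource has a name and a type) can be expressed as a small check over the configuration once it has been parsed from YAML into a Python dictionary. This is a hypothetical helper that only mirrors the rules described in this post; it is not Deployment Manager's own validation, which is more thorough.

```python
"""Hypothetical shape check for a parsed Deployment Manager config.
Mirrors only the rules described in this post; not the service's validation."""


def check_config(config: dict) -> list:
    """Return a list of problems found; an empty list means the shape looks fine."""
    problems = []
    # Each imported template must at least say where it lives.
    for imp in config.get("imports", []):
        if "path" not in imp:
            problems.append("every import needs a 'path'")
    resources = config.get("resources")
    if not isinstance(resources, list):
        problems.append("a 'resources' list is required")
        return problems
    # Each resource needs a name and a type (properties are type-specific).
    for i, res in enumerate(resources):
        for key in ("name", "type"):
            if key not in res:
                problems.append("resource %d is missing '%s'" % (i, key))
    return problems


if __name__ == "__main__":
    # The example configuration above, as it would look after YAML parsing.
    example = {
        "imports": [
            {"path": "/path/to/template/01", "name": "template-one"},
            {"path": "/path/to/template/02", "name": "template-two"},
        ],
        "resources": [
            {"name": "resource_name_01", "type": "type_of_resource_01"},
            {"name": "resource_name_02", "type": "type_of_resource_02"},
        ],
    }
    print(check_config(example))  # []
```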

Detailed documentation on templating and configuration files can be found here.

Now let's consider an example and deploy it to Google Cloud. We will create a cloud function that simply returns hello world and the time at which the request was received. Before diving into Deployment Manager, let's look at the prerequisites.

Prerequisites:

First, we need to install the Google Cloud CLI utility, gcloud, which comes with the Google Cloud SDK. Installation instructions for all platforms can be found here. Once it is installed, verify the installation:

```shell
# My CLI has the following versions at the time of writing
> gcloud --version
Google Cloud SDK 301.0.0
bq 2.0.58
core 2020.07.10
gsutil 4.51
```

Next, we need to configure our CLI session against our Google Cloud account. I do it like this:

```shell
gcloud init
# This command is interactive.
# It will ask you a few questions about which account and project to use.
# If no projects are available, it will ask you to create one.
# Select yes, and give it a name/ID.
```

Once the configuration is done, you can check which account you have activated by running the following command:

```shell
> gcloud auth list
# Response
Credentialed Accounts
ACTIVE  ACCOUNT
*       xxxx@gmail.com
```

Now that our CLI is ready, let's move on to the next step, i.e., enabling billing and APIs. To enable billing, navigate to Billing in the Google Cloud Console and enable it from there. Google Cloud comes with various services, and each service has an API. Not all APIs are enabled by default, so we need to enable them explicitly.

Navigate to the API Library at https://console.cloud.google.com/apis/library and enable the following APIs if you have not enabled them yet:

- Cloud Build API

- Cloud Deployment Manager V2 API

- Cloud Functions API

- Cloud Storage
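As an alternative to clicking through the console, the same APIs can be enabled with the gcloud services enable command, which accepts several service IDs at once. The sketch below only builds the command and, unless dry_run is turned off, runs it. The service IDs are assumptions on my part (in particular the one for Cloud Storage); confirm them with gcloud services list --available before relying on them. Billing still has to be enabled separately.

```python
"""Sketch: build (and optionally run) a `gcloud services enable` command for
the APIs listed above. The service IDs are assumptions; check them with
`gcloud services list --available`."""

import subprocess

# Assumed service IDs for the APIs listed above.
REQUIRED_SERVICES = (
    "cloudbuild.googleapis.com",         # Cloud Build API
    "deploymentmanager.googleapis.com",  # Cloud Deployment Manager V2 API
    "cloudfunctions.googleapis.com",     # Cloud Functions API
    "storage.googleapis.com",            # Cloud Storage (assumed ID)
)


def enable_services_command(project_id: str, services=REQUIRED_SERVICES) -> list:
    """Return the argv that enables all services in a single gcloud call."""
    return ["gcloud", "services", "enable", *services, "--project", project_id]


def enable_services(project_id: str, dry_run: bool = True) -> list:
    """Print the command; execute it only when dry_run is False."""
    cmd = enable_services_command(project_id)
    print(" ".join(cmd))
    if not dry_run:
        # Requires an installed and authenticated gcloud CLI.
        subprocess.run(cmd, check=True)
    return cmd
```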

Example Deployment:

Now that our CLI is configured and the APIs are enabled, let's move on to deploying a few resources using Deployment Manager. First, let's write some simple JavaScript code, which we will deploy to Cloud Functions. I am using the following JS code:

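```javascript
exports.handler = function (req, res) {
  // Build the timestamp inside the handler so it reflects when each request
  // arrives, not when the function instance was first loaded.
  const current_datetime = new Date();
  const formatted_time =
    current_datetime.getFullYear() + "-" +
    (current_datetime.getMonth() + 1) + "-" +
    current_datetime.getDate() + " " +
    current_datetime.getHours() + ":" +
    current_datetime.getMinutes() + ":" +
    current_datetime.getSeconds();

  // Log the optional "message" field of the request body (undefined if absent).
  console.log(req.body && req.body.message);

  res.status(200).send({
    hello: 'world',
    request_received_at: formatted_time
  });
};
```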

Let's prepare our template files which will be used by deployment manager to deploy this code as a cloud function.

Let's create two files namely cloud_function.py & cloud_function.yaml.

Here are my definitions in the YAML config file:

```yaml
imports:
- path: cloud_function.py # template
- path: function/index.js # cloud function code

resources:
- name: function
  type: cloud_function.py
  properties:
    codeLocation: function/
    codeBucket: my-cloud-function-bucket-raywon
    codeBucketObject: function.zip
    location: us-central1
    timeout: 60s
    runtime: nodejs8
    availableMemoryMb: 256
    entryPoint: handler
```

The template file cloud_function.py generates the infrastructure-as-code resource definitions that Deployment Manager then uses to create the resources for us.

You can find the template file here.
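For readers who have not seen a Python template before, here is a hypothetical and simplified sketch of its general shape. Deployment Manager calls a GenerateConfig(context) function, where context.env carries facts such as the project and deployment name and context.properties carries the values set under properties in the YAML file; the function returns a dictionary with resources (and optionally outputs). This is not the author's cloud_function.py: naming the function after the deployment, the type string and the property layout are assumptions modelled on Google's public Deployment Manager samples.

```python
"""Hypothetical, simplified sketch of a Deployment Manager Python template.
Not the author's cloud_function.py: the function name, type string and
property layout are assumptions modelled on Google's public samples."""


def GenerateConfig(context):
    """Entry point Deployment Manager calls for a Python template.

    context.env holds deployment facts such as 'project' and 'deployment';
    context.properties holds the values under `properties:` in the YAML config.
    """
    props = context.properties
    project = context.env["project"]
    # Assumption: name the function after the deployment.
    function_name = context.env["deployment"]
    source_archive_url = "gs://%s/%s" % (props["codeBucket"], props["codeBucketObject"])

    cloud_function = {
        "name": function_name,
        # Assumed type string for Cloud Functions v1 via Deployment Manager.
        "type": "gcp-types/cloudfunctions-v1:projects.locations.functions",
        "properties": {
            "parent": "projects/%s/locations/%s" % (project, props["location"]),
            "function": function_name,
            "sourceArchiveUrl": source_archive_url,
            "entryPoint": props["entryPoint"],
            "runtime": props["runtime"],
            "timeout": props["timeout"],
            "availableMemoryMb": props["availableMemoryMb"],
            "httpsTrigger": {},  # an empty object requests an HTTP trigger
        },
    }

    return {
        "resources": [cloud_function],
        # $(ref.NAME.FIELD) is filled in by Deployment Manager after creation.
        "outputs": [
            {"name": "url", "value": "$(ref.%s.httpsTrigger.url)" % function_name},
        ],
    }
```

Keeping the values in the YAML file and the structure in the template is what makes the template reusable: a second function only needs a new properties block.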

In the template, we specify the Cloud Build step used to store the code, and the cloud function itself.
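One reason function/index.js appears under imports in the YAML file is that imported files are made available to the template, so the template can package them itself. The sketch below shows one way to do that: zip the imported files under codeLocation in memory, then describe a Cloud Build step that decodes the archive and copies it to the bucket. It follows the general pattern of Google's public Deployment Manager samples; the helper names, the action string, the build step images and the runtimePolicy metadata are assumptions, not the author's exact template.

```python
"""Sketch: package imported function code and describe a Cloud Build step that
uploads it. Helper names, the action string and step layout are assumptions."""

import base64
import io
import zipfile


def zip_imported_code(imports: dict, code_location: str) -> bytes:
    """Zip every imported file under `code_location` (e.g. 'function/') in memory."""
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, mode="w", compression=zipfile.ZIP_DEFLATED) as zf:
        for path, content in imports.items():
            if path.startswith(code_location):
                # Store 'function/index.js' as 'index.js' at the root of the zip.
                zf.writestr(path[len(code_location):], content)
    return buffer.getvalue()


def upload_build_step(zip_bytes: bytes, bucket: str, obj: str) -> dict:
    """Describe a Cloud Build run that writes the zip and copies it to the bucket."""
    encoded = base64.b64encode(zip_bytes).decode("ascii")
    volumes = [{"name": "function-code", "path": "/function"}]
    return {
        "name": "upload-function-code",
        # Assumed action string for running a build from Deployment Manager.
        "action": "gcp-types/cloudbuild-v1:cloudbuild.projects.builds.create",
        # Assumption: re-run the build whenever the embedded code changes.
        "metadata": {"runtimePolicy": ["UPDATE_ON_CHANGE"]},
        "properties": {
            "steps": [
                {   # Decode the embedded zip onto a shared volume.
                    "name": "ubuntu",
                    "args": ["bash", "-c",
                             "echo '%s' | base64 -d > /function/function.zip" % encoded],
                    "volumes": volumes,
                },
                {   # Copy it to the bucket the function reads from.
                    "name": "gcr.io/cloud-builders/gsutil",
                    "args": ["cp", "/function/function.zip", "gs://%s/%s" % (bucket, obj)],
                    "volumes": volumes,
                },
            ],
            "timeout": "120s",
        },
    }
```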

As you can see, we are storing the cloud function source code in a storage bucket, so let's create that bucket now.

I am using the CLI tool gsutil to create the bucket. It comes packaged with the cloud SDK.

```shell
gsutil mb -p xxxxxxxx -c STANDARD \
  -l europe-north1 gs://my-cloud-function-bucket-raywon
# mb => make bucket
# -p => project ID
# -c => storage class
# -l => location
# followed by the bucket name
```

Now that our bucket is ready, let's deploy the function. I am using the gcloud CLI to deploy.

```shell
gcloud deployment-manager deployments create my-google-cloud-function --config cloud_function.yaml
# This command will take a minute or two.
# It will deploy the function to Google Cloud using Cloud Build.
# The source code zip is stored in the storage bucket.
```

After the deployment is done, it is time to query our cloud function. There are two ways to do this: with a simple curl call, or with the gcloud CLI tool.

Using curl:

```shell
curl https://us-central1-xxxxxxxx.cloudfunctions.net/my-google-cloud-function \
  -H "Authorization: bearer $(gcloud auth print-identity-token)"
# Response
{"hello":"world","request_received_at":"2020-7-15 14:19:26"}
```

Using gcloud:

```shell
gcloud functions call my-google-cloud-function
# Response
executionId: xxxxxxxx
result: '{"hello":"world","request_received_at":"2020-7-15 14:21:41"}'
```

As you can see from the curl call, we are passing an authorization bearer token. This is because Google has updated how it handles security for Cloud Functions: newly deployed cloud functions are private by default and require IAM authentication to be invoked.

Therefore, we use the gcloud auth command to get a token and pass it in the request.

The gcloud functions call command doesn't need an explicit token, because we already authenticated using gcloud init.
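The same authenticated call can be made from Python: fetch an identity token with gcloud auth print-identity-token, exactly as in the curl example, and send it in the Authorization header. This sketch uses only the standard library; the helper names are hypothetical, and xxxxxxxx stands for your project ID, as in the curl example.

```python
"""Sketch: call the private cloud function from Python, mirroring the curl
example. Helper names are hypothetical; needs an authenticated gcloud CLI."""

import json
import subprocess
import urllib.request


def identity_token() -> str:
    # Same command as in the curl example above.
    out = subprocess.run(["gcloud", "auth", "print-identity-token"],
                         check=True, capture_output=True, text=True)
    return out.stdout.strip()


def build_request(url: str, token: str) -> urllib.request.Request:
    # The token is sent as a Bearer credential, just like the curl -H flag.
    return urllib.request.Request(url, headers={"Authorization": "Bearer " + token})


def call_function(url: str) -> dict:
    with urllib.request.urlopen(build_request(url, identity_token())) as resp:
        return json.loads(resp.read().decode("utf-8"))


if __name__ == "__main__":
    # Replace xxxxxxxx with your project ID.
    print(call_function(
        "https://us-central1-xxxxxxxx.cloudfunctions.net/my-google-cloud-function"))
```

Without the header, the request is rejected because the function is private; with it, the response body is the same JSON object returned to curl.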

Summary:

- Installed the CLI utility, gcloud, and initialized the client.

- Enabled APIs in Google Cloud.

- Configured a storage bucket.

- Created a config file and a template file.

- Wrote some simple JS code to run as a cloud function.

- Deployed the code using Deployment Manager, and tested the cloud function.

Full documentation can be found here and a few tutorials can be found here.

You can find all the code used in this blog post here.

If you have any questions, thoughts or feedback, feel free to contact me on any platform you prefer.